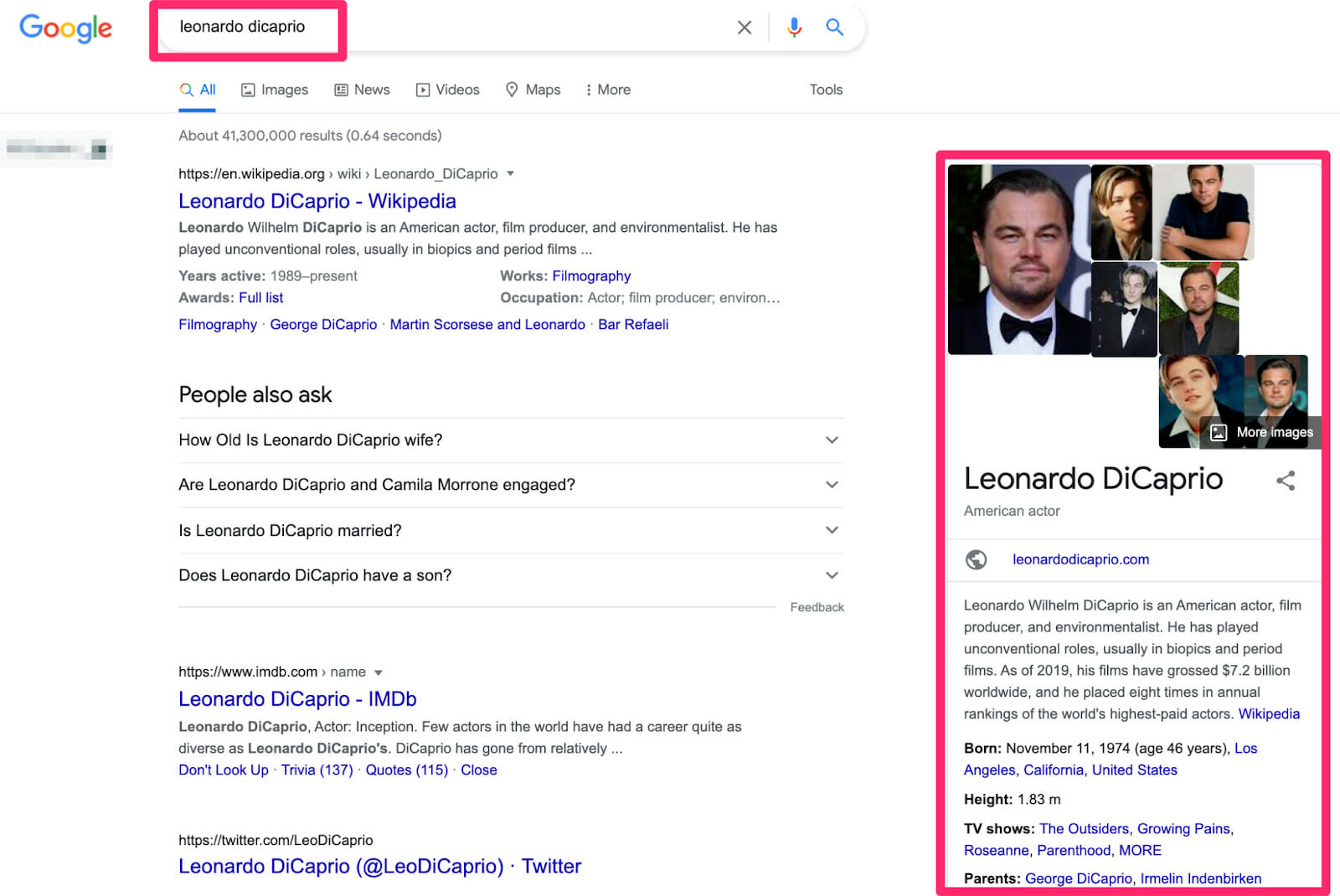

This summer is bringing a long-awaited — and perhaps long-threatened — Google search algorithm update. Among other changes, it introduces Core Web Vitals (CWV), a new set of page experience signals that will affect how websites rank in the search results.

Savvy website owners and marketers are paying close attention to the changes. There’s definitely plenty to unpack when it comes to CWV.

In this article, we’ll look at some key lessons that marketers need to learn from the new update, which signals really matter most to Google, and how much your website’s user experience (UX) has evolved in importance since the days of keyword stuffing.

Google’s Core Web Vitals in a Nutshell

CWV consists of three primary criteria that Google uses to measure how users experience your page. Yes, Google is now looking beyond your content to judge your website according to its UX as well.

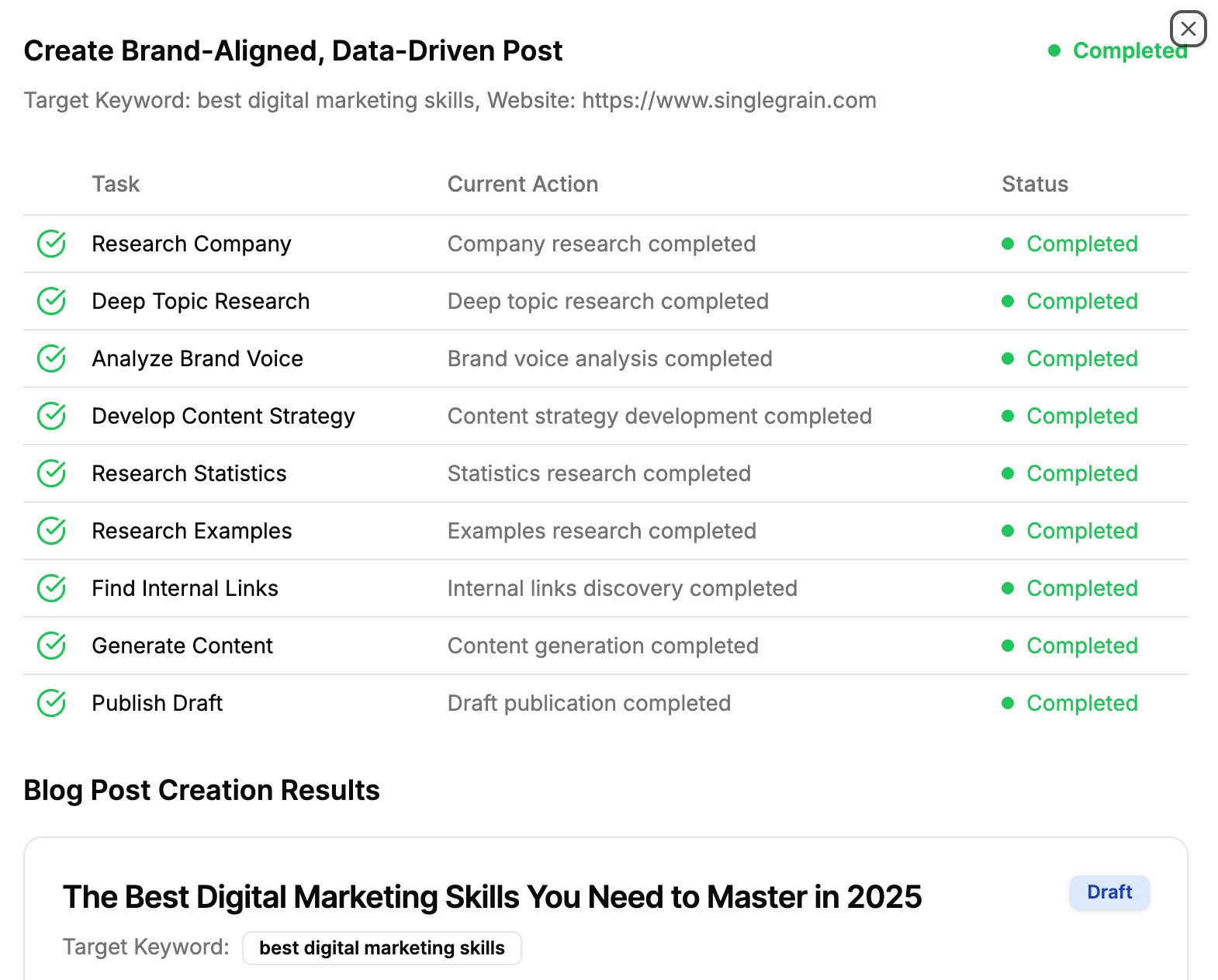

The CWV criteria are:

- Largest Contentful Paint (LCP), or how long it takes for a page’s main content to load. This is measured by how long it takes for the largest element visible in the viewport to render, whether that’s a video, an image or a chunk of text. LCP has to take no longer than 2.5 seconds to qualify as “good”; anything between 2.5 and 4 seconds is rated “needs improvement.”

- First Input Delay (FID), or how much time passes between a user’s first interaction with a page (a click, a tap or a key press) and the browser beginning to respond. It’s measured in milliseconds (ms), with “good” considered to be 100 ms or less.

- Cumulative Layout Shift (CLS), or how stable the page elements are. Page elements shouldn’t move around unexpectedly during or after rendering; Google considers a CLS score of 0.1 or less “good.”
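To make the three criteria concrete, here is a minimal Python sketch that rates a page against Google’s published thresholds (LCP 2.5 s and 4 s, FID 100 ms and 300 ms, CLS 0.1 and 0.25). The function names and the sample page values are illustrative assumptions, not part of any Google tool.

```python
# Published Core Web Vitals thresholds:
# (upper bound for "good", upper bound for "needs improvement").
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "FID": (100, 300),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
}


def rate(metric: str, value: float) -> str:
    """Return the rating bucket for one metric value."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


def passes_core_web_vitals(page: dict) -> bool:
    """A page passes only if all three metrics are rated "good"."""
    return all(rate(metric, page[metric]) == "good" for metric in THRESHOLDS)


# Hypothetical measurements for a single page.
page = {"LCP": 2.1, "FID": 80, "CLS": 0.18}
for metric, value in page.items():
    print(metric, rate(metric, value))
print(passes_core_web_vitals(page))  # False: CLS is only "needs improvement"
```

Note that one weak metric is enough to fail the page as a whole, even when the other two are comfortably “good.”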

In addition, the new update pays attention to whether or not sites use HTTPS, pass Google’s safe browsing checks, are mobile friendly, and avoid intrusive interstitials.

In other words, Google is judging your site on its performance and security, which are really subsets of UX.

Not only do these elements affect your site’s ranking on the SERP, but they also feed into ranking within Google’s Top Stories carousel, which no longer requires AMP.

While all of this might sound like a sweeping change, it’s really just a continuation of Google’s long-time wish to guide searchers to the most appropriate sites, in terms of content, intent, security and UX.

You can see the consistent trend when you look at a brief history of Google search:

- 2011: The Panda update prioritized rich, quality information that answers user questions.

- 2014: E-A-T guidelines, or Expertise, Authoritativeness and Trustworthiness. This covered content and website credibility, which includes things like site structure and security as well as off-page indicators like reviews, testimonials, and expert guest posts.

- 2015: The mobile update for mobile UX, including responsive design, mobile-friendly page structures, and mobile formatting.

- 2018: Google’s mobile-first indexing required mobile sites to provide structured data, high page speed and performance, and the same content as desktop sites.

In this context, CWV is just continuing the trend.


What Lies Behind Google’s Algorithm Updates?

For a long time, UX has been considered an onsite, internal issue, but Google’s updates show an increasing desire for seamless UX, creating a single integrated internet experience that stretches from search to conversion.

Google essentially wishes to connect its product (search) with your product (content). The company frames it as a desire to deliver the best experience possible for internet users, guiding them to the site that best meets all their needs: security, performance, mobile friendliness, and relevant content.

Don’t forget, though, that Google can still track what happens after someone leaves the search results and lands on your web page. Many of us are logged in to Google when we search, and a large share of websites have Google Analytics installed. Google has stated many times over the years that bounce rate isn’t a direct ranking factor, but its use of UX as a guiding principle is still in its infancy.

How quickly and smoothly your page renders is just the beginning. It’s the content relevance element that warrants closer attention.

Google has been consistently investing in new AI tech that improves its ability to understand the semantics behind both the content on your site, and the intent of the user.

If Google’s search algorithm is truly going to be able to recognize the difference between keyword stuffing and content relevance, or between black hat link-building and true domain authority, then it needs the ability to understand and vet the meaning of the content you publish.

That’s why the company has developed new tools like:

- Language Model for Dialogue Applications (LaMDA) to help AI systems hold more conversational dialog, and

- Multitask Unified Model (MUM), which has a much larger neural network than BERT, its predecessor, and which Google says is 1,000 times more powerful.

MUM boosts understanding of human questions and improves search, and Google intends to use it to produce instant answers to even more complicated questions. Ideally for website owners, Google will put the two together to direct the right user to the right site, but if it misuses that power, it could take both your content and your clicks.

For example, someone preparing to hike two different mountains wants to know how to prepare for each climb, so MUM may draw information from multiple websites to produce a single on-SERP answer, instead of sending the user to more than one site.

In fact, this is the very example Google itself gave when demonstrating MUM’s power.

Indeed, some thought leaders are increasingly nervous that Google is using UX issues as a pretext to retain more traffic for its own properties, instead of behaving as a search engine and directing searchers to third-party publishers and sites.


The UX of Zero Clicks

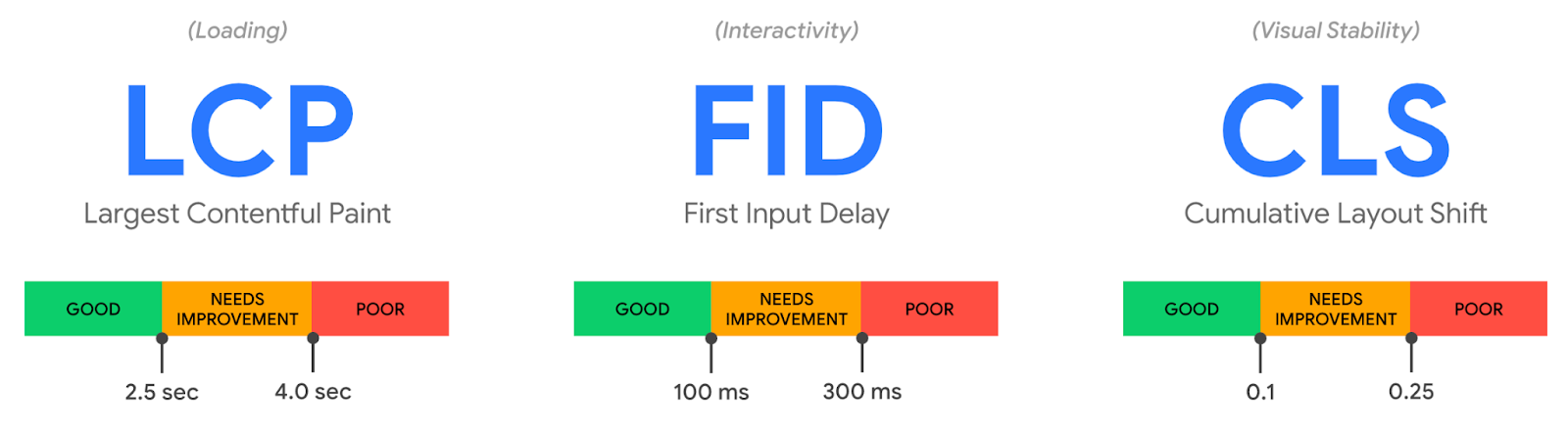

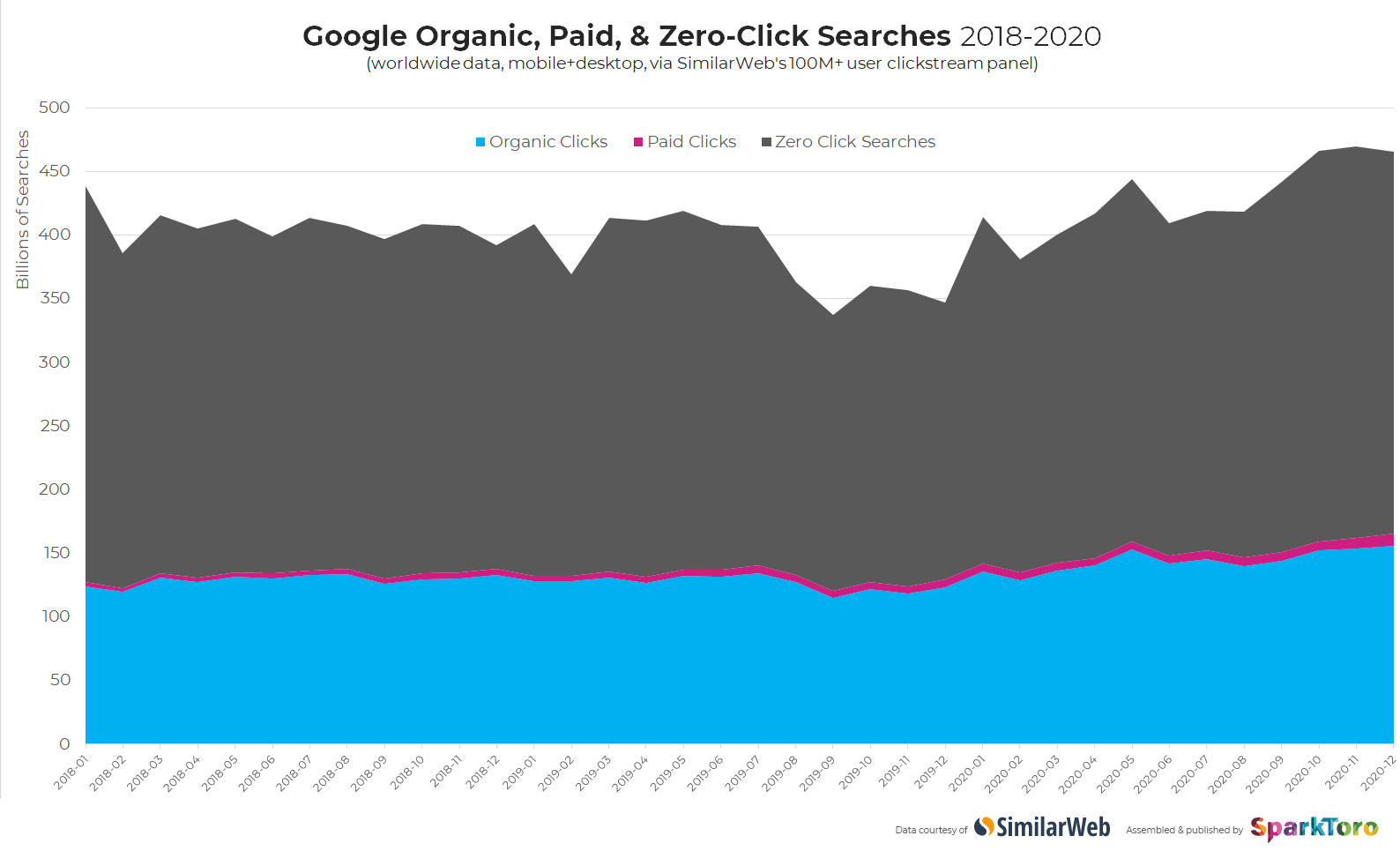

By investing in a true understanding of what the content and overall experience on third-party websites is all about, Google is also expanding its own knowledge graph. Critics argue that the stronger Google’s knowledge graph gets, the less of a chance independent content publishers have of acquiring traffic. As proof, they point to the rising number of “zero-click” searches.

Google uses SERP features like “answer boxes,” “local packs” and “knowledge panels” to point out information, aggregated from third-party sources, that its algorithms believe will satisfy what searchers are after.

But with an ever-increasing variety of feature types, and with these features displaying an increasing depth of information, Google is basically negating the need to click through.

According to a study from Rand Fishkin’s SparkToro in 2019, 50.33% of desktop searches in the U.S. ended without a click-through. By 2020, the number of zero-click desktop and mobile searches had hit 64.82%, although the final total is probably higher because that study didn’t include voice search.

“By displaying ads or its own products, Google can extract value from zero-click searches, while other sites might not,” observes George Nguyen, editor of Search Engine Land. “This can be especially troublesome considering Google sources much of the content that appears on its results pages from publishers, and as the proportion of zero-click searches increase, publishers may be losing out on traffic.”

There are also (well-founded) fears that Google is using its search dominance to take over specialist search services. For example, over 130 accommodation, travel and job vacancy firms, including TripAdvisor, Expedia and Trivago, wrote a letter of complaint to the Executive Vice-President & Commissioner for Competition of the EU, asserting that:

Google leverages “its unassailable dominance in general Internet search …to gain a competitive head start.”

Digital Sharecropping, the Knowledge Graph, and CWV

Mind you, the situation is very different for large corporations than it is for small businesses and independent blogs.

By targeting long-tail search terms, you’re less likely to compete with these big dogs to have your content surface, and Google is less likely to serve up those information-rich SERP features that keep people from digging deeper.

It’s also definitely still worthwhile to optimize your site for CWV (more on this below). Whether or not you actually have a chance of ranking for a high-volume, high-competition keyword, you still do want your visitors to have a good experience if and when they do arrive, no matter how you measure the quality of that experience.

And yes, it’s always a good idea to keep your acquisition channels as diverse as possible, so you’re never fully dependent on one platform that you’re not in control of. Remember the great Facebook organic reach apocalypse of 2018? That’s why so many marketers are favoring email nowadays.

After all, Google already dominates the search market and the search advertising market, and is heading towards taking over the wider digital ad market.

In 2020, Google held over 91% of the global search engine market, and controlled over 95% of search advertising and over 50% of display ads in the U.S.

Google’s plans to replace cookies with its proprietary FLoC (Federated Learning of Cohorts) system will basically give it total and final ownership over user data and thus online advertising.

True, there’s little you can do to prevent Google from expanding its empire, but you can use these insights to create seamless UX that optimizes your site to rank high in the SERPs.


What the New Update Means for Your Site

If your site’s been performing well so far and the bounce rate has been low, you’re unlikely to see much change in the next few weeks. Even though you likely haven’t been measuring CWV metrics, it’s clear that your site is already delivering good UX, so you should score okay on the new algorithm.

The page experience ranking update is rolling out gradually over the summer, so you’ll only see full impact towards the fall.

But as competing sites adjust to the new update and clean up their act to meet its requirements, you might find it more challenging to qualify for competitive keywords. It’s a good idea to audit and revamp your site with CWV in mind, to make sure that you meet Google’s UX ideals.

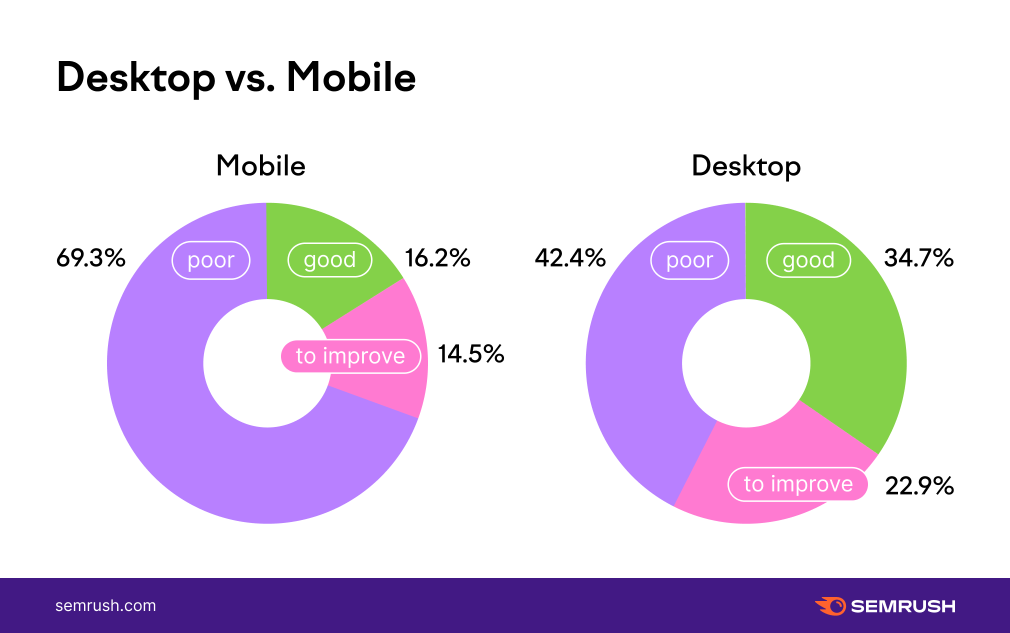

A February study by SEMrush measured CWV metrics for 78,000 top-ranking desktop pages and the same number of top-ranking mobile pages. The researchers discovered that most pages ranked “good” for each core web vital metric individually (60.8% passed for LCP, 59% for CLS, and 74.9% for FID), but just 34.7% were considered “good” for all three together.

This shows that most websites deliver decent UX, but fewer offer a user experience that fully reads Google’s mind.

Admittedly, testing your UX is a little tricky right now. Google uses real-world data collected from real users to rank sites. You can’t get that kind of info when you run your own tests, which will always be skewed by the specifics of your device and connection.

You can run lab testing before your site goes live, and field testing once it’s up and running. Lab testing means you can fix problems before they affect users, but it doesn’t provide a full picture of how the site will operate in real-world conditions; for that, you need field testing.

For example, lab tests sometimes use Total Blocking Time (TBT) as a proxy measure for First Input Delay (FID), because FID measures real user interaction, which can’t be replicated in the lab.

TBT measures how much time the browser’s main thread is blocked by long tasks (anything over 50 ms), which keeps the page from responding to user interactions, but it’s not a reliable replacement. FID is affected by the speed of users’ processors as well as the size of your JavaScript bundles.
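As a rough illustration of how TBT is totted up, the sketch below sums only the portion of each main-thread task that runs beyond 50 ms. It assumes you already have the task durations for the measurement window (between First Contentful Paint and Time to Interactive); the function name and the sample durations are made up for the example.

```python
LONG_TASK_THRESHOLD_MS = 50  # tasks longer than this count as "long tasks"


def total_blocking_time(task_durations_ms):
    """Sum the blocking portion (time beyond 50 ms) of each main-thread task."""
    return sum(max(0, duration - LONG_TASK_THRESHOLD_MS)
               for duration in task_durations_ms)


# Hypothetical main-thread tasks recorded during page load, in milliseconds.
tasks = [30, 120, 45, 250, 60]
print(total_blocking_time(tasks))  # 280 (70 + 200 + 10)
```

The same five tasks would block for longer on a slower phone, which is one reason a lab TBT figure can’t stand in for what real users experience as FID.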

Likewise, LCP can be dragged down by a server that’s slow to respond to a particular user, or by slow rendering on the client side, neither of which you’d necessarily see in lab tests.

CLS is especially complex. In the real world:

- device screens are different sizes so CLS can vary

- personalized content like injected ads and the impact of ad blockers means different users see different things

- CLS covers the entire life of the page, not just stability on first page load. That means if a user scrolls down, layout could shift, but a lab tool that only measures the initial load wouldn’t reflect that
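The last point is easier to see with numbers. The following sketch scores a list of layout shifts using the session-window approach Google adopted for CLS in 2021: shifts less than one second apart are grouped into a window of at most five seconds, shifts that follow recent user input are ignored, and the worst window is reported. The tuple format and the sample values are assumptions made for illustration.

```python
def cumulative_layout_shift(shifts, gap_ms=1000, max_window_ms=5000):
    """Return the largest session-window total of layout-shift scores.

    shifts: (start_time_ms, score, had_recent_input) tuples, sorted by time.
    """
    worst = 0.0
    window_total = 0.0
    window_start = None
    previous_time = None
    for start, score, had_recent_input in shifts:
        if had_recent_input:
            continue  # shifts caused by user input are expected, so skip them
        in_window = (
            window_start is not None
            and start - previous_time < gap_ms
            and start - window_start < max_window_ms
        )
        if in_window:
            window_total += score
        else:
            window_start = start  # begin a new session window
            window_total = score
        previous_time = start
        worst = max(worst, window_total)
    return worst


# Hypothetical page: two small shifts during load, then a late-loading ad
# pushes content down after the user has scrolled, nine seconds in.
shifts = [
    (200, 0.02, False),
    (600, 0.03, False),
    (9000, 0.15, False),
    (9400, 0.04, False),
    (9500, 0.50, True),   # triggered by a tap, so it is ignored
]
print(round(cumulative_layout_shift(shifts), 2))  # 0.19
```

A test that stopped at the initial load would have reported 0.05 for this page, while real users would experience 0.19.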

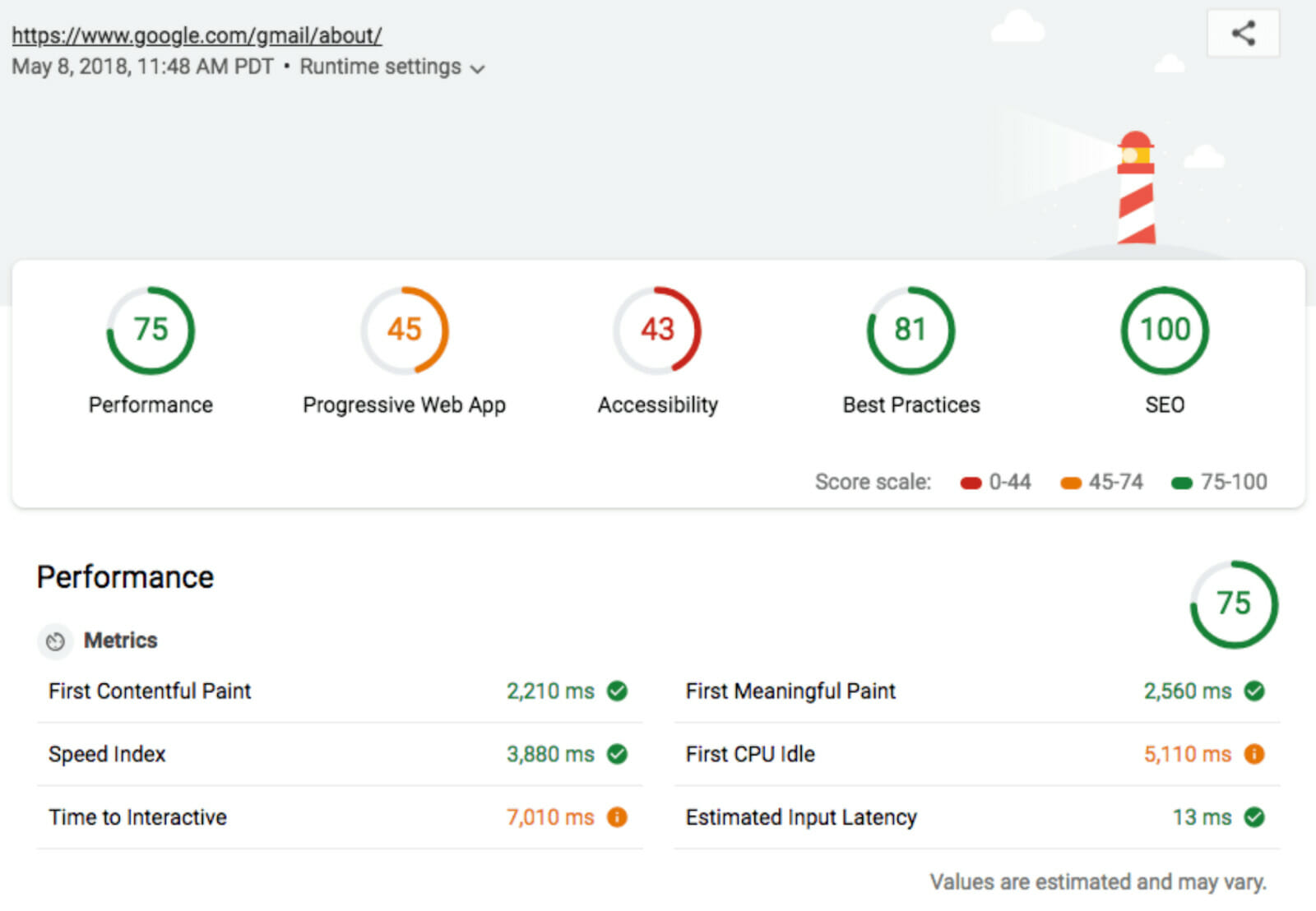

Many people recommend using Lighthouse (version 6.0 or later) to test for LCP, TBT and CLS, because it reports the same metrics Google looks at. However, Lighthouse still includes metrics that aren’t part of CWV, like First Contentful Paint (FCP), and the overall score doesn’t reflect the new algorithm, although this will probably change soon.

Field testing relies on tools like:

- Google PageSpeed Insights, which shows all 3 CWV metrics

- Chrome Web Vitals extension for desktop

- Core Web Vitals report from Google Search Console

These should be more accurate, but can still be confusing because, again, UX differs so much according to user conditions, such as between a mobile user in rural Montana and a desktop user in New York City.
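One way to make sense of that spread: Google assesses each metric at the 75th percentile of real page loads, so a page rates “good” only if at least three out of four visits meet the threshold. Here is a small sketch of that calculation using the simple nearest-rank method; the sample LCP values are invented, and Google’s own pipeline aggregates field data differently.

```python
import math


def percentile_75(samples):
    """75th percentile of a non-empty list, using the nearest-rank method."""
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))
    return ordered[rank - 1]


# Invented LCP samples (seconds) for the same page under different conditions.
lcp_seconds = {
    "desktop, fast connection": [1.1, 1.3, 1.2, 1.6, 1.4, 1.2, 1.5, 1.3],
    "mobile, slow connection": [3.8, 4.6, 2.9, 5.2, 4.1, 3.3, 4.9, 3.6],
}
for audience, samples in lcp_seconds.items():
    print(audience, percentile_75(samples))
# desktop, fast connection 1.4
# mobile, slow connection 4.6
```

The same page can look excellent for one audience and poor for another, which is why a single test run from your own desk tells you so little.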

Additionally, each of the aforementioned tools has its own drawbacks: the Chrome Web Vitals extension measures in real time, but doesn’t give recommendations for what to improve, while the Core Web Vitals report does give detailed recommendations, but takes around 30 days to reflect recent changes.


How to Optimize Your Site for Google’s Evolving UX Requirements

1) Cut Out Anything That’s Not Necessary

The more elements, tools, plugins, etc. running on your site, the more they’ll drag down page speed, so keep them to the bare minimum. For each one, ask yourself:

- What is your goal for this page?

- How does each element serve that goal?

Then ruthlessly cut every unnecessary element.

It’s also important to reduce CSS bloat. Use a tool such as the Coverage tab in Chrome DevTools to find unused CSS rules, then remove them.
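If you want a quick first pass before reaching for a dedicated tool, the idea can be sketched in a few lines of Python: collect the class names your stylesheet defines, collect the ones your HTML actually uses, and compare. This is a deliberately naive sketch. It only looks at class selectors, it can’t see classes added later by JavaScript, and the function and class names are illustrative.

```python
import re
from html.parser import HTMLParser


class ClassCollector(HTMLParser):
    """Collects every class name that appears in an HTML document."""

    def __init__(self):
        super().__init__()
        self.used = set()

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name == "class" and value:
                self.used.update(value.split())


def unused_css_classes(css: str, html: str) -> set:
    """Return class names defined in the CSS but never used in the HTML."""
    collector = ClassCollector()
    collector.feed(html)
    defined = set()
    # Only inspect selector text (the part before each "{"), not declarations.
    for selector in re.findall(r"([^{}]+)\{", css):
        defined.update(re.findall(r"\.(-?[A-Za-z_][\w-]*)", selector))
    return defined - collector.used


css = """
.hero { color: red }
.promo-banner, .btn:hover { margin: 0 }
@media (max-width: 600px) { .sidebar { display: none } }
"""
html = '<div class="hero"><a class="btn primary">Go</a></div>'
print(sorted(unused_css_classes(css, html)))  # ['promo-banner', 'sidebar']
```

Treat the output as a list of candidates to check by hand and not as a list of rules that are safe to delete.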

2) Don’t Add Plugins Unless You Have To

Use existing tools and plugins as much as possible. If you’re about to add a new plugin or tool, stop and ask why you can’t use an existing one. Each extra element slows your site a little more.

3) Use Clean Themes

On platforms like WordPress, choose themes with clean code. It might be time to change your theme if you’re using one that’s old and requires lots of patches to gain the functionality you need.

Research themes as thoroughly as you’d research a tech partner, because that’s what they are. If you’re using a custom page layout builder, make sure it doesn’t use bloated code. For example, ahead of the CWV update, Elementor updated its platform to remove a handful of wrapper elements, reducing the size of its document object model (DOM) output.

4) Optimize Media Elements

Heavy media elements are among the biggest culprits behind a slow-performing site. Use a CDN, compress images thoroughly with a plugin, and host heavy media such as video with a third-party service and embed it on your page.

5) Use Lazy Loading

Lazy loading is a great way to speed up time to LCP, as long as you apply it to offscreen media and not to the LCP element itself. Lighthouse, unlike speed checkers that focus on total download size and time to fully load, looks at how quickly visible content renders, so lazy loading helps drive a positive UX.
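Most modern browsers support lazy loading natively through the `loading="lazy"` attribute on images. As a rough sketch of how you might apply it in bulk, the Python function below adds the attribute to every `<img>` tag except the first one, on the assumption that the first image is the hero image and likely the LCP element. The function name and the `skip_first` parameter are hypothetical, and the regex is only suitable for simple, well-formed markup.

```python
import re

IMG_TAG = re.compile(r"<img\b([^>]*)>", re.IGNORECASE)


def add_lazy_loading(html: str, skip_first: int = 1) -> str:
    """Add loading="lazy" to <img> tags, leaving the first few untouched."""
    seen = 0

    def rewrite(match):
        nonlocal seen
        seen += 1
        attrs = match.group(1)
        already_set = re.search(r"\bloading\s*=", attrs, re.IGNORECASE)
        if seen <= skip_first or already_set:
            return match.group(0)  # keep above-the-fold or explicit tags as-is
        return f'<img loading="lazy"{attrs}>'

    return IMG_TAG.sub(rewrite, html)


page = '<img src="hero.jpg"><p>Intro</p><img src="chart.png">'
print(add_lazy_loading(page))
# <img src="hero.jpg"><p>Intro</p><img loading="lazy" src="chart.png">
```

On WordPress and similar platforms, a plugin or the platform itself will usually handle this for you, so check what’s already in place before adding your own.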


Staying One Step Ahead of the Google Update

Google’s latest CWV update continues the move to place UX front and center as part of the search experience.

Whether Google’s intentions are to do a more accurate job of bringing the right users to the right sites, or to hijack traffic by joining user and content on the SERPs itself, the overall aim is to build a seamless user experience that unites search and content.

Understanding this goal helps you take concrete steps to diversify your acquisition channels and optimize your UX so that it fits into the greater user journey, as well as providing a positive on-site experience.