How E-E-A-T SEO Builds Trust in AI Search Results in 2025

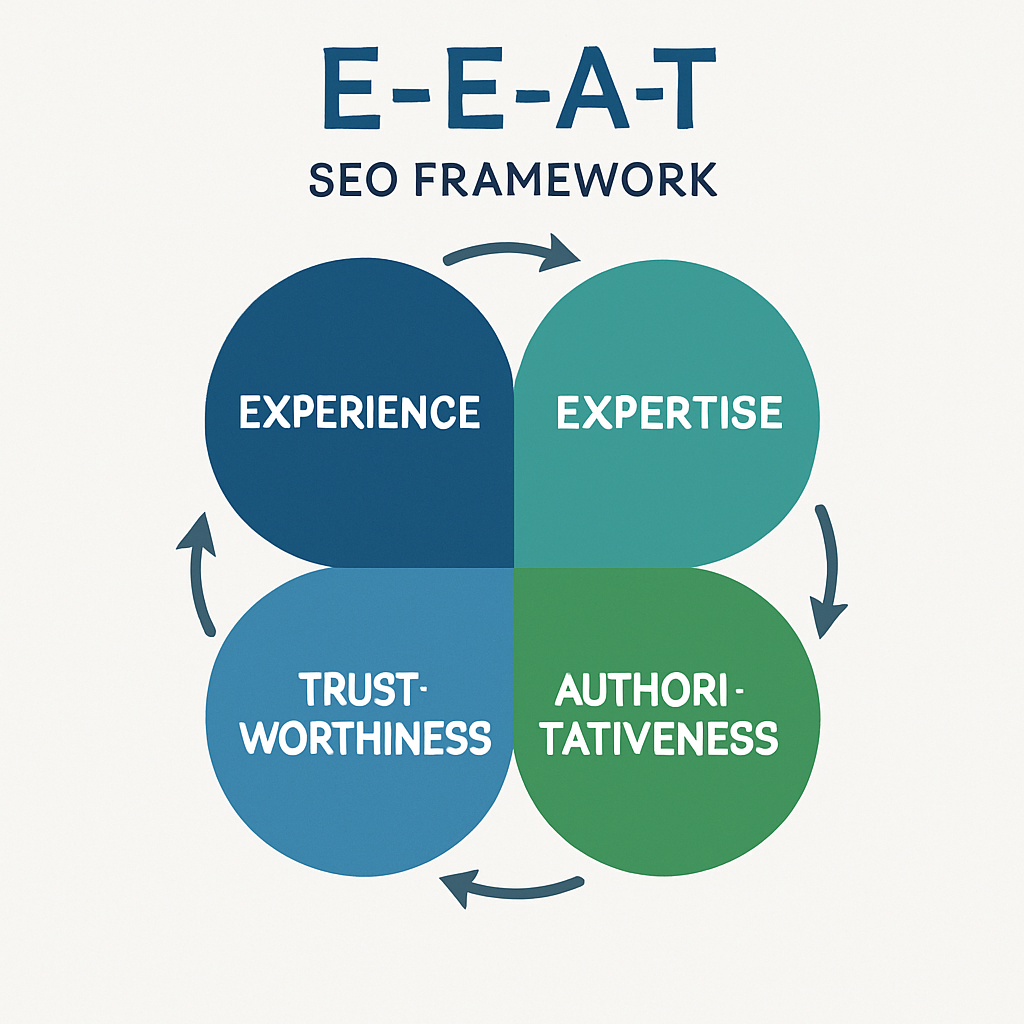

E-E-A-T SEO is now the difference between being summarized accurately by AI and being ignored. As answer engines compress sources into a single response, models lean on clear signals of Experience, Expertise, Authoritativeness, and Trustworthiness to decide which pages shape the final output. If your signals are weak or invisible to machines, your insights get stripped out in the synthesis.

This guide shows how to build verifiable trust signals that AI systems can parse, map them to your workflows, and measure their impact. You’ll get a practical framework for AI-era governance, step-by-step implementation guidance, and metrics that prove your trust investments pay off in visibility and revenue.

Why E-E-A-T shapes trust in AI‑generated answers

Answer engines reward sources they can verify and explain. In a world where users see synthesized responses first, models need to know not just what you say, but who said it, how they know it, and where the data came from.

Adoption is rising fast, which amplifies the stakes. According to the Deloitte Connected Consumer Survey 2025, 53% of U.S. consumers are experimenting with or regularly using generative AI for search and discovery, up from 38% in 2024. As usage grows, unverified answers erode trust and engagement.

Credibility hinges on visible sourcing. A Pew Research Center analysis found that 58% of Americans trust information from news organizations that disclose authors and sourcing “a lot” or “somewhat,” compared with just 24% for social platforms. Similar dynamics apply inside AI summaries: sources with explicit authorship, citations, and editorial standards are preferred inputs.

From ten blue links to single‑sentence answers

Classic SEO optimized for position and CTR; AI search optimizes for inclusion and influence. Your goal is not only to rank but to be quoted, cited, and used to ground the model’s response. Content that fails to expose machine-readable provenance often disappears behind an AI veneer: its facts may surface accurately in the answer, but with no attribution to you.

Machine‑readable trust signals models can parse

Trust in AI discovery is built on structured evidence: named authors with relevant credentials, concrete first-hand experience, clear citations to primary sources, and stable publishing practices. Schema markup, consistent bylines, date-stamped updates, and source-type labeling are the connective tissue that lets models evaluate E-E-A-T at scale.
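As a rough illustration of the markup described above, the sketch below builds a schema.org `Article` object with a named author, publication and update dates, and a citation list, then serializes it as JSON-LD. The function name, the sample author, and the example.com URLs are placeholders, and whether any particular AI system reads these properties is an assumption, not something this sketch can confirm.

```python
import json


def build_article_jsonld(headline, author_name, author_title,
                         published, modified, citations):
    """Build a schema.org Article dict that can be serialized as JSON-LD."""
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        # Named author with a role, so the byline is machine-readable.
        "author": {
            "@type": "Person",
            "name": author_name,
            "jobTitle": author_title,
        },
        # ISO 8601 dates for first publication and the latest genuine update.
        "datePublished": published,
        "dateModified": modified,
        # Each cited source becomes a CreativeWork with a name and URL.
        "citation": [
            {"@type": "CreativeWork", "name": name, "url": url}
            for name, url in citations
        ],
    }


# Placeholder values for illustration only.
article = build_article_jsonld(
    headline="Example headline",
    author_name="Jane Doe",
    author_title="Senior Analyst",
    published="2025-01-15",
    modified="2025-06-01",
    citations=[("Example primary study", "https://example.com/study")],
)

# Embed the output in the page inside a <script type="application/ld+json"> tag.
print(json.dumps(article, indent=2))
```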

An evidence‑based framework to operationalize E‑E‑A‑T

The shift to AI search favors teams that treat E-E-A-T as an operational system rather than a set of page-level tweaks. Map each element to specific processes, metadata, and QA checks to make credibility repeatable.

Experience signals you can prove

Demonstrate first-hand knowledge with concrete artifacts: data you collected, experiments you ran, product screenshots, and outcome details. Expose the “how we know” layer through captions, methods sections, and on-page disclosures so models can attribute claims correctly.

Organizations that map every answer back to a named expert and disclose AI involvement on-page have seen gains in user confidence: the Deloitte Insights transparency playbook highlights cases where session duration rose 12–15% after adding expert bios and source citations to pages that frequently appear in AI summaries. For a deeper, AI-specific perspective on tying experience to search visibility, study how E‑E‑A‑T in AI content drives 2025 SEO success.

Clarity about AI assistance is part of trustworthiness. State where AI was used (research synthesis, outline, draft); note human review; and specify data sources. If you’re evolving your disclosure model, this guide to practical transparency tactics in AI workflows can help teams converge on consistent patterns.

Expertise and oversight for AI‑assisted content

Involve credentialed subject-matter experts to supervise prompts, fact-check outputs, and approve assertions that influence decisions. Governance that mirrors E-E-A-T accelerates adoption: in the KPMG Global AI Report, 61% of executives cited difficulty proving the reliability and expertise of AI outputs as the top barrier, while organizations using E-E-A-T-like oversight were 2.3× more likely to deploy AI search tools company-wide and reported a 30% increase in internal trust scores.

Operationalize this oversight within a content QA system. Build roles and checks that guard against model hallucinations and subtle inaccuracies while preserving production speed.

- Domain lead: validates claims, approves methodology, and adds first-hand insights.

- Editorial QA: checks verifiability, citation integrity, and tone consistency.

- Data steward: ensures data lineage, units, and time frames are accurate and traceable.

- Risk and compliance: flags YMYL (Your Money or Your Life) sensitivities and enforces jurisdictional guidance.

To make oversight scalable without slowing output, integrate an AI-aware QA layer. See AI content quality methods that actually rank for a step-by-step approach to fact-checking, evidence tagging, and structured metadata.

Authoritativeness beyond backlinks

Backlinks still matter, but AI summaries also value institution-level credibility: consistent expert coverage of a topic, references in reputable publications, and evidence of peer review. Make this machine-readable by using author entity profiles, an organization schema, and source-type labels (e.g., primary research, practitioner guide, case analysis).

Map your topic clusters to named experts and reinforce them over time. For strategic plays that elevate perceived authority and improve inclusion in AI overviews, review these E‑E‑A‑T strategies that reinforce Google’s trust signals.
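One possible way to express author entity profiles and an organization schema is a JSON-LD `@graph` in which each expert has a stable `@id` that every page reuses. The sketch below assumes a hypothetical example.com URL scheme, a made-up author, and a placeholder profile link; `knowsAbout` carries the topic clusters assigned to that expert.

```python
import json

ORG_ID = "https://example.com/#organization"  # hypothetical stable identifier


def person_entity(slug, name, topics, profiles):
    """Describe one expert as a schema.org Person with a reusable @id."""
    return {
        "@type": "Person",
        "@id": f"https://example.com/authors/{slug}#person",
        "name": name,
        "knowsAbout": topics,         # topic clusters mapped to this expert
        "sameAs": profiles,           # external profiles for the same person
        "worksFor": {"@id": ORG_ID},  # points at the Organization node below
    }


graph = {
    "@context": "https://schema.org",
    "@graph": [
        {
            "@type": "Organization",
            "@id": ORG_ID,
            "name": "Example Co",
            "url": "https://example.com/",
        },
        person_entity(
            "jane-doe",
            "Jane Doe",
            ["technical SEO", "structured data"],
            ["https://www.linkedin.com/in/example"],
        ),
    ],
}

print(json.dumps(graph, indent=2))
```

Reusing the same `@id` values on every page is the design choice that matters here: it gives parsers one consistent entity per author and per organization instead of many near-duplicates.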

Trustworthiness in the age of LLMs

Trust is the absence of unpleasant surprises. Publish your strategy, mark last-reviewed dates, include conflict-of-interest statements where relevant, and provide easy remediation paths for corrections. For generative content, disclose model versions, retrieval sources, and human review checkpoints.

Consistency across pages is crucial. Establish a disclosure style guide and monitor adherence through spot checks. Teams building these programs often adopt the patterns outlined in our analysis on transparency in AI to align legal, editorial, and UX decisions.

E‑E‑A‑T SEO in practice: an AI content QA workflow

Turn trust into a repeatable process using a layered workflow. Capture provenance as you create, not as an afterthought, and make every layer parsable by both humans and machines.

- Brief: define thesis, claims needing citations, and primary data sources before drafting.

- Draft: require in-text citations, author notes on first-hand experience, and flagged YMYL statements.

- Review: expert signs off on claims; editor validates citations; steward verifies data lineage.

- Publish: expose schema for author, organization, citations, and review dates; add disclosure of AI involvement.

- Monitor: track AI overview inclusion, citations, and engagement; queue pages with anomalies for update.
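The Review and Publish steps above could be backed by a simple pre-publish gate. The following is a minimal sketch, assuming a hypothetical `Draft` record whose field names are invented for illustration; a real CMS would have its own data model.

```python
from dataclasses import dataclass, field


@dataclass
class Draft:
    """Hypothetical CMS record; every field name here is illustrative."""
    title: str
    author: str = ""
    expert_reviewer: str = ""
    citations: list = field(default_factory=list)
    ai_disclosure: str = ""
    last_reviewed: str = ""  # ISO date of the most recent expert review


def publish_blockers(draft):
    """Return the reasons a draft should not be published yet."""
    checks = [
        (draft.author, "missing named author"),
        (draft.expert_reviewer, "no expert sign-off"),
        (draft.citations, "no citations recorded"),
        (draft.ai_disclosure, "AI involvement not disclosed"),
        (draft.last_reviewed, "missing review date"),
    ]
    # A check fails when its value is empty (empty string or empty list).
    return [reason for value, reason in checks if not value]


ready = Draft(
    title="Example guide",
    author="Jane Doe",
    expert_reviewer="Sam Lee",
    citations=["https://example.com/study"],
    ai_disclosure="AI-assisted outline; human-written and expert-reviewed",
    last_reviewed="2025-06-01",
)
incomplete = Draft(title="Untitled", author="Jane Doe")

print(publish_blockers(ready))       # empty list: nothing blocks publishing
print(publish_blockers(incomplete))  # lists each missing provenance item
```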

E‑E‑A‑T SEO implementation playbook for AI search

This playbook turns principles into day-to-day execution. Start with visibility diagnostics, then build the trust stack that earns inclusion in AI answers.

- Audit your “answer footprint.” Test priority queries in AI search experiences and note which pages are cited or summarized. Compare against traditional SERPs to spot mismatches.

- Map experts to topics. Assign each topic cluster to named practitioners with bios, credentials, and a backlog of experience-rich examples they can provide.

- Instrument provenance capture. Add fields in your CMS for methods, data sources, and first-hand proof, so writers can include them as part of the draft.

- Standardize disclosures. Define where and how you state AI use, review steps, and data lineage across all content types.

- Enforce structured data. Add author, organization, citation, and review schema. Keep dates fresh with genuine updates, not superficial edits.

- Harden your QA layer. Build prompts for internal validators to cross-check facts, units, and time frames; route flagged statements to experts for approval.

- Close content gaps surgically. Use competitive analysis to find topics where your experience and research are undersupplied in AI summaries. Platforms like Clickflow can help by using advanced AI to analyze your competitive landscape, identify content gaps, and generate strategically positioned content that outperforms competitors.

- Benchmark and iterate. Track inclusion rates in AI overviews, number of citations earned, and on-page engagement deltas after adding bios and citations.

- Build a refresh cadence. Prioritize pages that influence AI answers and schedule evidence updates and expert reviews quarterly.

- Expand to social and LLMs. Apply the same trust stack to social search, embeddings, and LLM retrieval so your content is preferred across channels.
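For the refresh cadence in the playbook above, a small helper can surface pages whose last expert review is older than a quarter. This is only a sketch: the 90-day interval is an assumed reading of "quarterly", and the page paths and dates are made up.

```python
from datetime import date, timedelta

REVIEW_INTERVAL = timedelta(days=90)  # assumption: "quarterly" means about 90 days


def pages_due_for_review(last_reviewed, today):
    """Return URLs whose last review is at least one interval old, oldest first."""
    due = [
        url for url, reviewed in last_reviewed.items()
        if today - reviewed >= REVIEW_INTERVAL
    ]
    return sorted(due, key=lambda url: last_reviewed[url])


# Hypothetical review log: page path -> date of last expert review.
review_log = {
    "/guides/schema-basics": date(2025, 1, 10),
    "/guides/ai-disclosure": date(2025, 4, 20),
    "/research/benchmark": date(2025, 2, 15),
}

queue = pages_due_for_review(review_log, today=date(2025, 6, 1))
print(queue)
```

In practice the queue would likely be filtered further to the pages that influence AI answers, as the playbook suggests.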

Avoiding common AI Overviews pitfalls

Many teams chase superficial tweaks and miss structural issues. Thin author pages, missing citation schema, and vague disclosures are frequent blockers—especially for YMYL topics.

If inclusion lags despite good content quality, examine the issues outlined in why AI Overviews optimization fails—and how to fix it. Addressing these root causes often unlocks the citations needed for consistent visibility.

Measurement: Proving trust signals move the needle

E-E-A-T SEO becomes defensible when it’s measured. Align KPIs with the outcomes AI systems and users reward: reliable inputs for models and higher engagement for humans.

AI answer visibility KPIs

Track inclusion and influence across AI surfaces to validate that your trust stack is working. Use a consistent query set and monitor movement over time as you roll out changes.

- AI overview inclusion rate: percent of tracked queries where your domain is cited or summarized.

- Citation depth: number of sections or bullets in the AI answer that reference your content.

- Attribution clarity: frequency of named author references or explicit brand citations in AI responses.

- Entity alignment: consistency of author and organization entities recognized across pages.
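The first two KPIs above reduce to simple ratios over a tracked query set. The sketch below assumes observations are recorded by hand or by some monitoring tool into a hypothetical `Observation` record; the queries and counts are invented sample data.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    """One tracked query checked against an AI answer (hypothetical record)."""
    query: str
    cited: bool          # was the domain cited or summarized in the answer?
    cited_sections: int  # sections or bullets in the answer referencing it


def inclusion_rate(observations):
    """Share of tracked queries where the domain is cited or summarized."""
    if not observations:
        return 0.0
    return sum(o.cited for o in observations) / len(observations)


def mean_citation_depth(observations):
    """Average number of referencing sections, over cited answers only."""
    cited = [o for o in observations if o.cited]
    if not cited:
        return 0.0
    return sum(o.cited_sections for o in cited) / len(cited)


sample = [
    Observation("what is e-e-a-t", cited=True, cited_sections=3),
    Observation("author schema example", cited=True, cited_sections=1),
    Observation("ai overview optimization", cited=False, cited_sections=0),
    Observation("ymyl content rules", cited=False, cited_sections=0),
]

print(f"Inclusion rate: {inclusion_rate(sample):.0%}")
print(f"Mean citation depth: {mean_citation_depth(sample):.1f}")
```

Keeping the query set fixed between measurements is what makes the two numbers comparable over time.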

On‑page trust metrics and experimentation

On-page behavior reflects perceived credibility. Test the incremental impact of bios, citations, and disclosures on engagement, especially for pages that appear in AI summaries.

- Session duration and scroll depth deltas after adding expert bios and methods sections.

- Outbound citation clicks to primary sources as a proxy for research credibility.

- Feedback rate on corrections or clarifications, signaling trust in remediation.

- Conversion-rate lift on YMYL pages after implementing disclosures and QA checklists.
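The deltas in this list are relative changes against a baseline period. A minimal sketch with made-up numbers follows; on its own, a before/after comparison does not establish that the trust elements caused the change, so a controlled test is preferable where traffic allows.

```python
def relative_lift(before, after):
    """Relative change from a baseline; 0.125 means an increase of 12.5%."""
    if before <= 0:
        raise ValueError("baseline must be positive")
    return (after - before) / before


# Hypothetical numbers: average session duration in seconds,
# before and after adding an expert bio and a methods section.
lift = relative_lift(before=80.0, after=90.0)
print(f"Session duration lift: {lift:.1%}")
```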

Governance maturity matters. As mentioned earlier, organizations that mirrored E-E-A-T in their AI oversight were significantly more likely to deploy AI search at scale and saw higher trust scores, per the KPMG Global AI Report. Build your measurement plan so leadership sees progress and reinvests accordingly. When prioritizing your roadmap, focus first on pages with high business impact and a realistic shot at AI inclusion.

Turn E‑E‑A‑T SEO into your AI‑search advantage

AI search rewards content that proves what it knows and shows how it knows it. Operationalizing E-E-A-T SEO means pairing experience you can validate with expertise you can name, authority you can structure, and trust you can measure. Done consistently, this earns more reliable inclusion and more persuasive AI citations.

If you want a partner to build this trust stack end to end, from governance to AI overview visibility, get a free consultation with Single Grain. We’ll align E-E-A-T signals with GEO/AEO (generative engine optimization and answer engine optimization), instrument the right metrics, and turn your expertise into measurable wins across AI and classic search.

Frequently Asked Questions

How should I budget for E-E-A-T improvements in AI search?

Group investments into three buckets: content provenance (author bios, citations, disclosures), technical infrastructure (schema, CMS fields, validation), and governance (expert review, QA time). Start with low-lift, high-impact fixes—like reusable disclosure components—before funding research-driven content and expert interviews.

How do I adapt E-E-A-T for multilingual or region-specific content?

Localize author entities and credentials, not just translations—use region-relevant titles, professional IDs, and regulatory references. Implement hreflang and region-specific bylines, and map local experts to topics so AI systems can recognize authority within each market.
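The hreflang part of this answer can be generated rather than hand-written. The sketch below emits `<link rel="alternate">` tags for a hypothetical set of regional URLs; the function name and the example.com addresses are placeholders.

```python
from html import escape


def hreflang_links(variants, default_url=None):
    """Return <link> tags for each language-region variant of a page.

    variants maps codes such as "en-us" to absolute URLs.
    """
    tags = [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}" />'
        for code, url in sorted(variants.items())
    ]
    if default_url:
        # x-default marks the fallback page for unmatched languages or regions.
        tags.append(
            f'<link rel="alternate" hreflang="x-default" href="{escape(default_url)}" />'
        )
    return tags


links = hreflang_links(
    {
        "en-us": "https://example.com/us/guide",
        "de-de": "https://example.com/de/leitfaden",
    },
    default_url="https://example.com/guide",
)
print("\n".join(links))
```

Each regional page should carry the full set of tags, including the one that points to itself, so the variants reference each other consistently.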

What’s a practical approach for small teams to implement E-E-A-T?

Create a minimum viable trust stack: a single expert bio template, a standard methods/citations block, and a short review checklist. Use an advisory board or rotating SME office hours to inject expertise without slowing production.

How should we handle user-generated content without hurting E-E-A-T?

Moderate UGC with clear contributor tiers (verified practitioner, customer, community) and display badges and disclosure labels. Add rel attributes and schema for Q&A where appropriate, and selectively noindex low-signal threads while featuring curated expert responses.

What belongs in a correction and incident response policy for AI-era trust?

Maintain a public changelog with timestamps, what changed, and who approved it; link corrections to the original claim. For material updates, show an inline notice, notify subscribers, and update structured data so AI systems can register the revision.
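A changelog entry of this kind might be modeled as below, with the page's `dateModified` bumped in the same step so the structured data reflects the revision. The `Correction` fields and the sample values are hypothetical; only `dateModified` is a schema.org property.

```python
from dataclasses import dataclass, asdict


@dataclass
class Correction:
    """One public changelog entry (field names are illustrative)."""
    timestamp: str     # ISO 8601, e.g. "2025-06-01T09:30:00Z"
    summary: str       # what changed
    approved_by: str   # who approved the change
    claim_anchor: str  # link or fragment pointing at the original claim


def apply_correction(jsonld, changelog, correction):
    """Append the entry to the changelog and return JSON-LD with a new dateModified."""
    changelog.append(asdict(correction))
    updated = dict(jsonld)  # shallow copy; the caller's dict is left unchanged
    updated["dateModified"] = correction.timestamp[:10]  # keep the date part only
    return updated


page = {"@type": "Article", "headline": "Example", "dateModified": "2025-01-15"}
changelog = []
fix = Correction(
    timestamp="2025-06-01T09:30:00Z",
    summary="Corrected the survey year cited in the introduction.",
    approved_by="Jane Doe",
    claim_anchor="#introduction",
)

page_v2 = apply_correction(page, changelog, fix)
print(page_v2["dateModified"], len(changelog))
```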

How do I evaluate tools and vendors that support E-E-A-T workflows?

Prioritize systems that capture provenance at creation (methods, sources, reviewers), expose audit trails, and support granular schema out of the box. Look for API access, role-based permissions, and security certifications (e.g., SOC 2) to integrate with your QA and compliance processes.

When should I expect E-E-A-T upgrades to influence AI Overviews?

Technical signals can be recognized within weeks after recrawl, while authority and expert consistency typically compound over 2–3 refresh cycles. Early green lights include more precise brand or author mentions in AI answers and increased alignment of recognized entities across pages.