How AI Agents Influence Agency Selection Committees

AI agency selection is no longer driven solely by human instincts, past relationships, and polished pitch theater. Across marketing, procurement, and legal, AI agents now pre-screen vendors, summarize complex proposals, forecast outcomes, and shape which agencies even make it into the room. If you sit on a selection committee or want to be selected, understanding how these agents work is becoming mission-critical.

This article unpacks how AI agents influence every stage of agency selection, from defining requirements and building longlists to scoring RFP responses, negotiating contracts, and monitoring performance. You will see how committees can design an agent-assisted framework, which tools and governance practices matter most, what risks to watch for, and how to measure whether AI is actually improving your decisions rather than just speeding them up.


Strategic Shifts in AI Agency Selection Committees

AI agents are software entities that can autonomously perform multi-step tasks, such as gathering information, running analyses, and drafting recommendations, based on high-level goals you set. Unlike a single chat prompt, they operate over longer workflows, orchestrating different models and data sources to support complex decisions. In the context of agency evaluation, that means handling many of the time-consuming, data-heavy tasks that used to bog committees down.

Agency selection committees are typically cross-functional teams that include marketing leaders, procurement, finance, legal, and, sometimes, HR or IT. Their job is to balance performance potential, cost, risk, and cultural fit while maintaining transparency and governance. Historically, they relied on manual research, stakeholder referrals, and spreadsheet scorecards to compare options, which made the process slow and susceptible to bias.

79% of companies have already adopted AI agents, which means many organizations now have the infrastructure to apply agents to vendor and agency decisions. Once agents begin pre-processing market data, proposals, and historical performance, they effectively reshape which agencies are considered, how they are evaluated, and how confidently committees can defend their final choices.

This shift does not remove humans from the loop; instead, it changes where human judgment is applied. Rather than spending weeks collecting information and manually normalizing it, committee members can focus on interpreting AI-generated insights, challenging assumptions, and probing for soft factors such as chemistry, innovation mindset, and brand alignment.

From Manual Shortlists to Agent-Assisted Decisions

In the traditional model, agency shortlists grew out of word-of-mouth, incumbent relationships, and whoever happened to surface through ad-hoc research. Stakeholders assembled decks, scoring spreadsheets, and email threads by hand, often under intense time pressure. The quality of the shortlist was heavily dependent on who knew whom, which meant promising but less visible agencies could be overlooked.

Spreadsheet-based scorecards improved transparency but still relied on humans to enter and interpret every data point. Committees often struggled to compare like with like across pricing models, service scopes, and success metrics.

With agentic workflows, AI agents continuously crawl public information, normalize terminology across proposals, and map each agency’s offering back to your defined requirements. As explored in detail in guidance on how agentic AI is revolutionizing digital marketing, these systems can string together tasks like research, extraction, clustering, and scoring into a coherent pipeline that feeds your committee with structured comparisons rather than raw noise.

The table below illustrates how the evolution from manual to agent-assisted approaches changes the character of agency selection work.

| Approach | Process Characteristics | Strengths | Limitations | Where It Fits Best |
|---|---|---|---|---|
| Informal, human-only selection | Unstructured research; heavy reliance on relationships and referrals | Fast for small spends; leverages existing trust | Opaque, biased, hard to audit; poor coverage of market options | Low-risk, tactical projects |
| Manual structured scoring | Spreadsheet scorecards; manual data entry from proposals | More transparent; repeatable criteria | Labor-intensive; inconsistent data quality; slow to update | Mid-sized engagements with modest vendor pools |
| AI-augmented selection | LLMs assist with summarization; humans orchestrate tasks | Faster digestion of documents; better comparability | Still fragmented workflows; quality depends on individual prompts | Teams exploring AI, but without full automation |
| AI-agent-orchestrated selection | Agents automate research, extraction, and initial scoring with human approvals | Significant time savings; consistent application of criteria; strong audit trail | Requires governance, tooling, and change management | Strategic, high-stakes agency partnerships |

Key Touchpoints for AI Agents Across the Selection Journey

Because AI agents can coordinate multiple steps, it helps to think in terms of the full agency selection lifecycle. At each stage, agents can take on different roles: analyst, researcher, forecaster, or compliance checker. Deliberately mapping these touchpoints prevents “shadow AI” experiments from emerging in silos without oversight.

Typical stages where agents can add value include:

- Clarifying requirements and success metrics based on historical performance and stakeholder input

- Scanning the market to build a longlist of relevant agencies and validating their capabilities

- Drafting and tailoring RFPs or briefs for different agency categories

- Ingesting and normalizing proposal data into a unified schema

- Scoring responses against weighted criteria and surfacing trade-offs

- Analyzing pitch meetings, Q&A sessions, and reference calls

- Monitoring post-selection performance relative to forecasted outcomes

Later sections will walk through these stages in more detail, but the key idea is straightforward: AI agents should handle high-volume, repeatable cognition so committees can reserve their energy for nuanced judgment calls and relationship building.

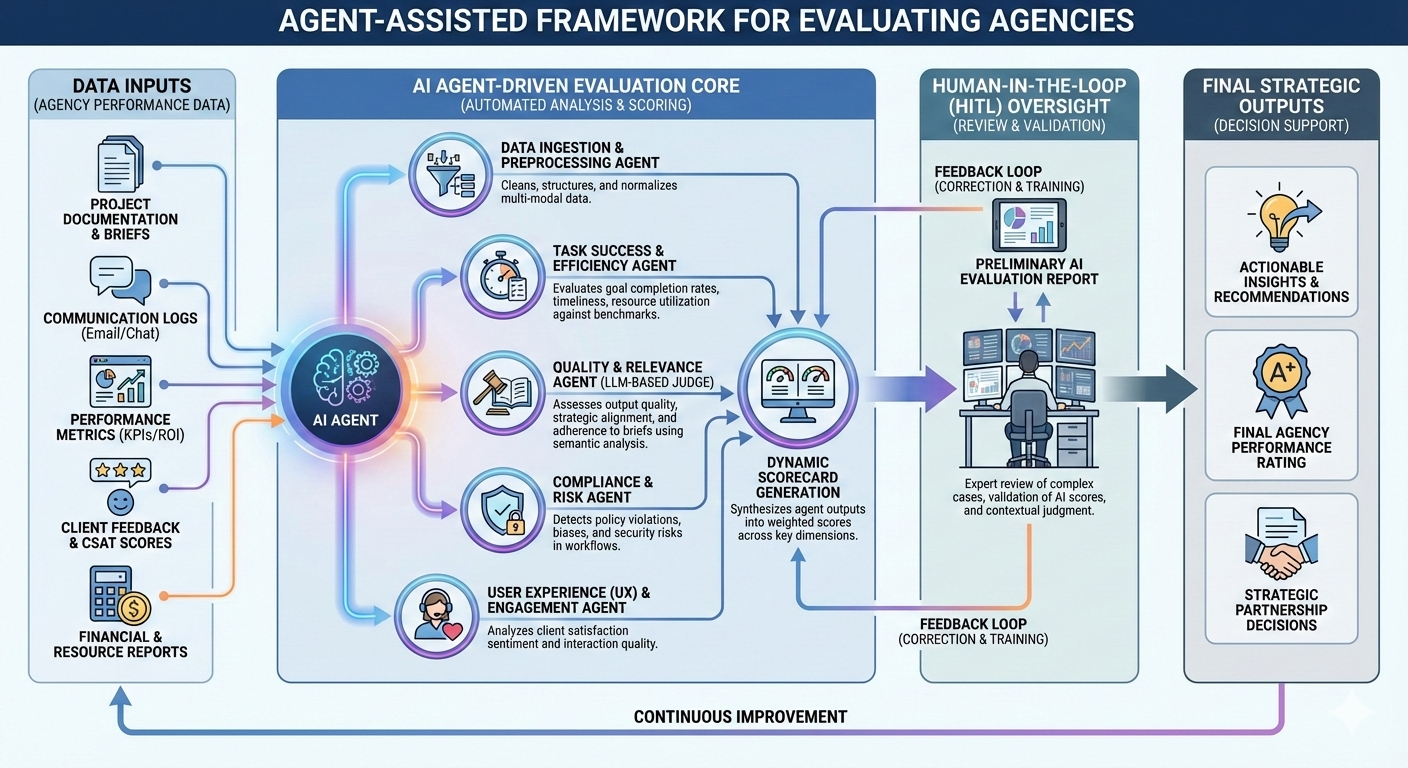

Agent-Assisted Framework for Evaluating Agencies

To make AI agency selection rigorous, committees need a repeatable operating model. An agent-assisted framework defines which decisions remain human-only, which tasks are delegated to agents, what data those agents can access, and how outputs feed into final approvals. Done well, this framework shortens decision cycles while improving fairness and traceability.

Early Discovery and AI Agency Selection Inputs

The first step is clarifying what “good” looks like for your next agency partnership. AI agents can mine internal data (past campaign performance, channel mix, win/loss analyses, and even qualitative feedback from stakeholders) to propose a draft set of objectives, KPIs, and constraints. Committees can then refine these into a clear brief that will later drive scoring rubrics.

Next comes market scanning. Agents crawl public data to assemble a longlist of agencies that match your requirements for geography, vertical expertise, service mix, and AI maturity. Organizations that already invest in advanced AI for marketing understand how the same modeling capabilities used to segment audiences or generate creative can be repurposed to analyze vendor positioning, case studies, and thought leadership.

To avoid reinventing the wheel, your agents might start from curated, human-generated benchmarks, such as independent overviews of top AI marketing agencies in 2025, and then expand that set with additional candidates discovered via web search, databases, and social signals. The agent’s role is to standardize basic attributes (size, focus, tech stack), identify red flags, and cluster similar agencies so your committee sees a structured map of the market rather than a chaotic list of names.

Throughout these early stages, humans stay responsible for finalizing requirements, setting diversity or sustainability goals, and confirming that no promising challengers have been excluded due to sparse digital footprints. The goal is to combine breadth of coverage with deliberate, documented judgment.

RFPs, Proposal Scoring, and Shortlist Decisions

Once you know what you are looking for, AI agents can help you generate tailored RFPs or briefing documents. Based on your goals, they can propose weighted evaluation criteria, detailed question sets, and standardized response formats that make scoring much more reliable. This is where aligning your RFP with an overarching AI marketing strategy becomes crucial, ensuring you ask agencies to demonstrate not just creative talent but also data, experimentation, and governance capabilities.

Some large enterprises have piloted “superassistant” AI agents that automatically gather proposal data, benchmark agency performance, simulate scenarios, and draft ranking recommendations routed through human approval gates. They report materially faster decision cycles and more uniform scoring across business units, freeing committee members to focus on strategic trade-offs and risk rather than on data wrangling.

In a similar vein, your own agents can extract pricing structures, normalize KPIs, and highlight where agencies have glossed over specific requirements or made unusually aggressive performance claims. They can cross-check case study results against external data, summarize reference feedback, and flag inconsistencies that deserve live questioning during pitches. The committee then uses these structured insights to build a shortlist that reflects both potential and areas needing deeper investigation.

Crucially, AI-generated scores should never be treated as final verdicts. Instead, they act as a starting point for discussion, with clear visibility into which inputs drove each recommendation so that domain experts can challenge or adjust the results.
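To make the idea of "visibility into which inputs drove each recommendation" concrete, here is a minimal sketch of a weighted rubric that keeps the per-criterion breakdown alongside the total. The criteria names, weights, and the 0-10 rating scale are illustrative assumptions, not a recommended rubric; a real committee would define its own.

```python
from dataclasses import dataclass

# Hypothetical criteria and weights; a real committee would set its own.
WEIGHTS = {
    "strategy": 0.30,
    "creative": 0.25,
    "measurement": 0.25,
    "governance": 0.20,
}


@dataclass
class ScoredProposal:
    agency: str
    total: float
    contributions: dict[str, float]  # normalized weight * rating, per criterion


def score_proposal(agency: str, ratings: dict[str, float],
                   weights: dict[str, float] = WEIGHTS) -> ScoredProposal:
    """Combine 0-10 ratings into a weighted total, keeping the breakdown."""
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"{agency}: missing ratings for {sorted(missing)}")
    total_weight = sum(weights.values())
    # Normalize weights so the total stays on the same 0-10 scale.
    contributions = {
        name: weights[name] / total_weight * ratings[name] for name in weights
    }
    return ScoredProposal(agency, sum(contributions.values()), contributions)


if __name__ == "__main__":
    result = score_proposal(
        "Agency A",
        {"strategy": 8, "creative": 6, "measurement": 9, "governance": 7},
    )
    print(f"{result.agency}: {result.total:.2f}")
    # List the criteria that contributed most to the total first.
    for name, value in sorted(result.contributions.items(),
                              key=lambda kv: kv[1], reverse=True):
        print(f"  {name}: {value:.2f}")
```

Because the breakdown travels with the total, a committee member who disagrees with a ranking can see which criterion is responsible and adjust either the rating or the weight, rather than arguing with an opaque number.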

Pitches, Negotiation, and Performance Forecasting

During presentations and Q&A, AI agents can record and transcribe sessions, then automatically tag segments by theme, such as strategy, creative, measurement, and governance. They can produce neutral summaries of each pitch, highlight unanswered questions, and compare how consistently each agency articulated its approach across different stakeholders. This reduces the risk that a compelling anecdote overshadows gaps in operational detail.

For negotiation, agents can model different fee structures, scopes, and risk-sharing mechanisms, such as performance-based components or pilot phases. They can scan contract drafts line by line to surface non-standard clauses, potential liability, and misalignments with your internal playbooks, giving legal and procurement teams a focused list of issues to resolve rather than a dense wall of text.
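As a rough illustration of fee-structure modeling, the sketch below compares a flat retainer with a hybrid deal (lower base fee plus a bonus on incremental revenue) across a few scenarios. All figures, the bonus mechanism, and the function names are hypothetical assumptions chosen for the example; real contracts will have more terms than this.

```python
def annual_cost_retainer(monthly_fee: float) -> float:
    """Total yearly cost of a flat monthly retainer."""
    return monthly_fee * 12


def annual_cost_hybrid(base_monthly_fee: float, bonus_rate: float,
                       incremental_revenue: float) -> float:
    """Lower base retainer plus a bonus on incremental revenue.

    The bonus is never negative: the agency is not charged for a shortfall.
    """
    bonus = bonus_rate * max(incremental_revenue, 0.0)
    return base_monthly_fee * 12 + bonus


if __name__ == "__main__":
    # Hypothetical incremental revenue scenarios for the contract year.
    scenarios = {"pessimistic": 100_000, "expected": 400_000, "optimistic": 900_000}

    flat = annual_cost_retainer(monthly_fee=13_000)
    for label, revenue in scenarios.items():
        hybrid = annual_cost_hybrid(base_monthly_fee=10_000, bonus_rate=0.05,
                                    incremental_revenue=revenue)
        cheaper = "hybrid" if hybrid < flat else "flat retainer"
        print(f"{label}: flat={flat:,.0f} hybrid={hybrid:,.0f} -> {cheaper} costs less")
```

Laying the options out per scenario shows where the structures cross over, which is usually the point worth negotiating.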

Forecasting is another area where AI support is especially valuable. Agents can combine historical performance data with scenario modeling to estimate realistic outcome ranges under different agency proposals. Platforms such as ClickFlow, which specialize in rapid SEO experimentation and content testing, provide the empirical lift curves and test results that agents can use as inputs when stress-testing performance promises against what has actually worked in similar environments.
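One simple way to stress-test a performance promise is to ask where the claimed result would fall among results you have actually observed. The sketch below assumes you hold a list of historical lifts from comparable tests; the numbers and the 0.9 threshold are placeholders, not real data or a recommended cutoff.

```python
# Hypothetical lifts (as fractions) from comparable past tests; not real data.
HISTORICAL_LIFTS = [0.04, 0.07, 0.10, 0.12, 0.15, 0.18, 0.22, 0.25]


def claim_percentile(claimed_lift: float, historical_lifts: list[float]) -> float:
    """Share of historical results at or below the claimed lift (0.0 to 1.0)."""
    if not historical_lifts:
        raise ValueError("need at least one historical result")
    at_or_below = sum(1 for lift in historical_lifts if lift <= claimed_lift)
    return at_or_below / len(historical_lifts)


def needs_live_questioning(claimed_lift: float, historical_lifts: list[float],
                           threshold: float = 0.9) -> bool:
    """Flag claims that match or beat nearly everything seen before."""
    return claim_percentile(claimed_lift, historical_lifts) >= threshold


if __name__ == "__main__":
    for claim in (0.12, 0.40):
        pct = claim_percentile(claim, HISTORICAL_LIFTS)
        flag = needs_live_questioning(claim, HISTORICAL_LIFTS)
        print(f"claimed lift {claim:.0%}: percentile {pct:.2f}, flag={flag}")
```

A flag here is a prompt for a question in the pitch, not evidence that the agency is wrong; an unusually high claim may be justified by a genuinely different approach.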

Selection committees should explicitly ask agencies how they use AI for planning, experimentation, and optimization, and whether they are prepared to operate within a data-rich, agent-assisted governance framework after the contract is signed.

Tools, Governance, and Roles for AI-Driven Agency Decisions

Introducing AI agents into agency selection is an organizational change that touches risk management, data strategy, and accountability. The committees that see the most benefit treat agents as part of a well-governed system that combines the right stack, clear ethics guidelines, and role clarity for every stakeholder involved.

Building the AI Tool Stack for Your Selection Committee

An effective tool stack for AI-augmented agency selection is modular, interoperable, and governed by strong data controls. Rather than looking for a single platform to do everything, committees typically assemble specialized tools that agents orchestrate behind the scenes.

Key functional categories include:

- Research and vendor intelligence: Tools that monitor agency websites, social presence, reviews, and industry news to maintain an up-to-date market map.

- RFP automation: Systems that template, version, and distribute briefs while tracking responses and deadlines.

- Proposal summarization and scoring: LLM-based tools that extract structured fields, compare narratives, and apply weighted rubrics.

- Pricing and ROI modeling: Models that simulate outcomes, compare fee structures, and tie projected impact to your funnel data.

- Risk and compliance review: Components that check data privacy, security, and regulatory alignment of both agencies and selection tools.

- Contract analysis: Legal AI that flags non-standard terms and ensures clauses align with internal policies.

- Meeting and pitch analysis: Transcription and analytics layers that summarize sessions and tag key insights.

When evaluating these tools, security and compliance should be first-class criteria. Agents that can integrate across these components (pulling from research tools, analyzing proposals, and feeding results into your procurement or CLM systems) provide the greatest leverage. However, that integration must be balanced with strict access controls, audit logging, and clear ownership to avoid accidental data exposure.

Governance, Ethics, and Human-in-the-Loop Controls

Without governance, AI agents can inadvertently amplify existing biases, favor incumbents with more data, or recommend agencies that raise ethical or brand-safety concerns. The goal is not only to get faster decisions, but also to make demonstrably fairer, more defensible ones.

The Harvard Division of Continuing Education has highlighted how organizations can pair AI-driven analysis with formal ethics policies and human accountability. In a Harvard Professional & Executive Education blog, they describe marketing leaders who improved campaign ROI and decision quality by coupling AI forecasting and shortlist generation with review boards and explicit transparency requirements for vendors.

For agency selection committees, practical governance measures can include:

- Documenting where and how AI agents are used at each stage of the process, including data sources and decision rights.

- Requiring explainable scoring models, so committee members can see which factors drove an agent’s recommendations.

- Setting human approval thresholds, especially for high-impact decisions such as excluding agencies or awarding contracts.

- Running periodic bias audits to check for systematic underweighting of smaller, newer, or minority-owned agencies.

- Restricting sensitive vendor documents to enterprise-grade, governed AI environments rather than consumer chatbots.

- Demanding clarity from agencies on their own AI governance, including how they protect your data and avoid harmful automation.
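A periodic bias audit can start with something as plain as comparing average agent-assigned scores across agency groups. The sketch below is a minimal version under stated assumptions: the group labels, the scores, and the choice of "incumbent" as the reference group are all hypothetical.

```python
from collections import defaultdict
from statistics import mean


def mean_score_by_group(records: list[tuple[str, float]]) -> dict[str, float]:
    """Average score per group from (group_label, score) pairs."""
    groups: dict[str, list[float]] = defaultdict(list)
    for group, score in records:
        groups[group].append(score)
    return {group: mean(scores) for group, scores in groups.items()}


def score_gaps(records: list[tuple[str, float]],
               reference_group: str) -> dict[str, float]:
    """Difference between each group's mean score and the reference group's."""
    means = mean_score_by_group(records)
    reference = means[reference_group]
    return {g: m - reference for g, m in means.items() if g != reference_group}


if __name__ == "__main__":
    # Hypothetical agent-assigned scores, labeled by agency group.
    records = [
        ("incumbent", 8.0), ("incumbent", 7.0),
        ("new_entrant", 6.0), ("new_entrant", 6.5),
    ]
    for group, gap in score_gaps(records, reference_group="incumbent").items():
        print(f"{group}: {gap:+.2f} vs incumbent")
```

A gap on its own does not prove bias, since groups can differ for legitimate reasons. It tells the committee where to look, for example at whether sparse documentation rather than weaker capability is pulling a group's scores down.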

Legal and compliance teams should also weigh in on questions such as data residency, cross-border transfers, retention periods, and whether AI vendors act as processors or sub-processors under regulations such as the GDPR. Clarifying these points up front prevents painful renegotiations later in the selection process.

Role Guide: CMO, Procurement, Legal, and Finance

AI agents work best when they augment clearly defined human roles rather than replace them. Each function on the selection committee has distinct responsibilities in designing, supervising, and using agent-assisted workflows.

- CMO or marketing leader: Owns the strategic brief, defines success metrics, and ensures AI scoring weights reflect brand, creative, and customer experience priorities, not just short-term efficiency.

- Procurement: Configures vendor evaluation frameworks, manages RFx processes, and maintains the audit trail of how agents were used, including approvals and overrides.

- Legal and compliance: Approve AI tools from a regulatory standpoint, set boundaries on data usage, and define which contract provisions must always be reviewed by humans.

- Finance: Validates ROI models, ensures that AI-generated forecasts reconcile with budgeting and forecasting processes, and monitors whether realized performance matches financial assumptions.

- IT / Data teams: Implement and monitor the AI infrastructure, manage integrations, and track system performance, security, and reliability.

Committees can also borrow best practices from HR teams that already use AI in recruitment, such as separating screening and hiring-authority roles, providing candidates (in this case, agencies) with clarity on how AI is used, and creating channels for feedback when participants believe an automated process has treated them unfairly.

Metrics to Prove AI Agents Improve Agency Choices

To justify investment and maintain trust, you need evidence that AI agents are improving agency selection outcomes, not just adding techno-gloss to existing processes. That means establishing baselines, tracking changes over time, and tying selection decisions to downstream performance.

- Decision speed: Time from brief approval to signed contract, compared before and after deploying agents.

- Committee effort: Total hours spent on research, scoring, and coordination, with attention to how work shifts from manual data wrangling to higher-value analysis.

- Scoring consistency: Variance in scores across committee members for the same agency, indicating whether structured AI inputs are reducing noise.

- Performance vs. forecast: How closely actual campaign results align with AI-assisted forecasts used during selection.

- Supplier diversity and innovation: Share of spend going to new entrants or diverse-owned agencies, reflecting whether agents help surface non-incumbent options.

- Risk and compliance outcomes: Incidents related to data, brand safety, or contractual disputes arising from agency work.
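Two of the metrics above reduce to short calculations. The sketch below, with made-up inputs, measures scoring consistency as the population standard deviation of committee members' scores for one agency, and performance vs. forecast as an absolute percentage error. Both formulas are one reasonable choice among several, not the only way to define these metrics.

```python
from statistics import pstdev


def score_spread(scores_by_member: dict[str, float]) -> float:
    """Population standard deviation of members' scores for one agency.

    Lower values mean the committee is scoring more consistently.
    """
    return pstdev(list(scores_by_member.values()))


def forecast_error(forecast: float, actual: float) -> float:
    """Absolute percentage error of a forecast relative to the forecast value."""
    if forecast == 0:
        raise ValueError("forecast must be non-zero")
    return abs(actual - forecast) / abs(forecast)


if __name__ == "__main__":
    # Hypothetical scores for the same agency, before and after agent support.
    before = {"cmo": 9.0, "procurement": 5.0, "finance": 7.0}
    after = {"cmo": 8.0, "procurement": 7.0, "finance": 7.5}
    print(f"spread before: {score_spread(before):.2f}")
    print(f"spread after:  {score_spread(after):.2f}")

    # Hypothetical forecast of 1,000 qualified leads vs. 900 delivered.
    print(f"forecast error: {forecast_error(1000, 900):.0%}")
```

Tracking these numbers across several selection cycles, rather than a single one, gives a fairer picture of whether agent support is changing anything.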

On the marketing side, you can also tie selection quality to revenue and efficiency gains by analyzing whether new agencies outperform predecessors on key funnel metrics. Resources that dive into how AI marketing agents can maximize your ROI provide practical patterns for connecting AI-driven decisions to tangible business outcomes, which you can adapt to the vendor-selection context.

Turning AI Agency Selection Insight Into Better Partner Choices

AI agents now influence AI agency selection in ways most stakeholders never see directly, from quietly pruning longlists to spotlighting outlier proposals and simulating performance scenarios. Committees that recognize this shift and design intentional, governed agent-assisted workflows will make faster, more defensible choices than those clinging to purely manual methods.

For selection committees, the path forward is clear: define your requirements and ethics policies first, then deploy agents where they reduce friction and bias without displacing human judgment. Build a tool stack and governance model that treats AI as an accountable collaborator, and measure success through decision speed, outcome quality, and supplier diversity, not just automation for its own sake.

For agencies, the implication is that you are increasingly selling not just to people, but also through machines. Making your case studies, metrics, and methodologies machine-readable, being transparent about your own AI use, and backing claims with data from experimentation platforms such as ClickFlow will all help you stand out when agents sift through competing proposals.

If you want a marketing partner that already operates comfortably inside this AI-augmented world (optimizing for search engines, social platforms, and AI overviews alike), Single Grain blends data-driven SEVO, agentic AI, and performance creative to drive revenue growth that selection committees can validate. Get a FREE consultation to explore how we can help you become the kind of agency partner AI agents and human stakeholders consistently rank at the top of the list.


Frequently Asked Questions

How should an agency adapt its pitch materials so they’re easier for AI agents to evaluate accurately?

Structure your proposals with clear headings, standardized sections, and consistent terminology so they can be parsed reliably. Use data tables for key metrics, label assumptions explicitly, and avoid burying critical information in narrative copy that’s hard for machines to extract.

What’s the first step for a committee that wants to introduce AI agents into its selection process but has limited experience with AI?

Start with a narrow, low-risk use case such as proposal summarization or meeting transcription and keep all final decisions fully human-led. Treat it as a pilot: document what worked and where human overrides were needed, then expand only once you’ve agreed on guardrails and responsibilities.

How can smaller organizations with modest budgets still benefit from AI-assisted agency selection?

Smaller teams can use lightweight tools, often built into existing productivity or CRM platforms, to automate research, basic comparisons, and document review. Focus on a few repeatable templates and workflows rather than a full stack to gain consistency and time savings without heavy implementation costs.

What role does data quality play in the effectiveness of AI agents during agency evaluation?

AI agents are only as reliable as the data and documents they consume, so inconsistent formats, missing baselines, or inaccurate historical results will undermine their recommendations. Committees should invest time in cleaning key datasets and standardizing input templates before expecting meaningful insights from agents.

How can global companies handle language and cultural differences when using AI agents for multi-country agency selection?

Use multilingual models that can translate and normalize proposals across regions while still preserving local nuances. Combine this with regional reviewers who validate that automated translations haven’t distorted compliance, cultural fit, or market-specific strategies.

What are common failure modes when relying too heavily on AI agents for agency selection?

Over-automated processes can favor agencies with more polished documentation, penalize unconventional yet promising approaches, or overlook context such as internal politics and brand history. Warning signs include very little debate in committee meetings, highly uniform scores, and decisions that can’t be convincingly explained to stakeholders.

How long does it typically take to stand up an effective AI-assisted agency selection workflow?

If you already have basic data and procurement systems in place, a simple, single-market workflow can often be piloted in a few weeks. More complex, cross-functional implementations with legal, finance, and IT integrations usually require a phased rollout over several months to get policies, integrations, and training right.